Last week I attended the Nebraska Library Leadership Institute (NLLI), which is held every other year at the Saint Benedict Center in Schuyler, NE. I got a lot out of the week, and I am thankful that my library provides me with opportunities like this.

The week consisted of a lot of group activities, many of which were similar to group work in college, but the focus wasn’t on the work itself but on how individuals in the group led and how they could be more effective. While I found the material interesting, I wasn’t sure how I would relate it to my job, and it brought up my recurring feeling that I wasn’t doing enough or that I should be looking for a job with more leadership potential. I talked with a lot of people about what they did and did a lot of thinking about what I want to do. Incidentally, I was told by a few people I had the “coolest job there,” and while I don’t think that’s true, it was nice to hear.

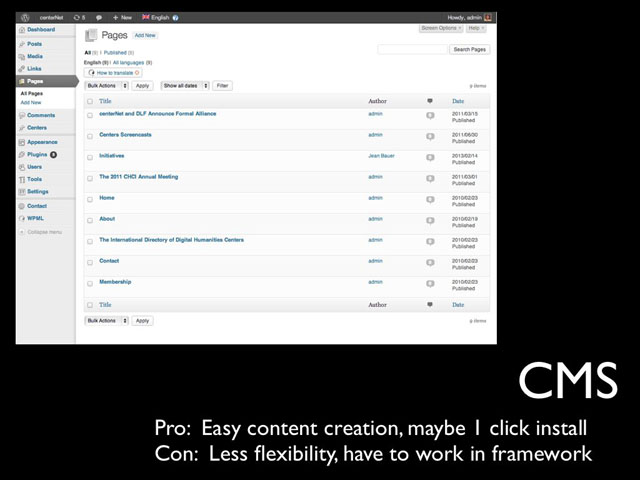

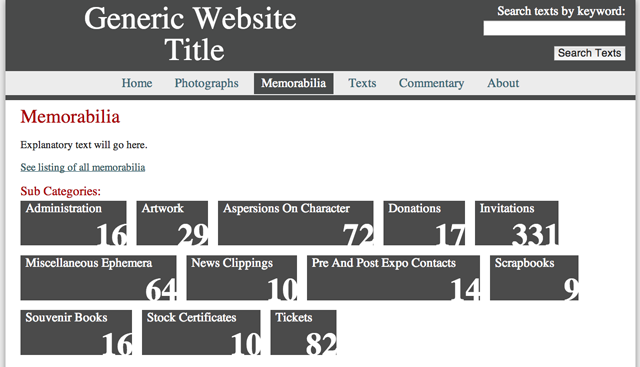

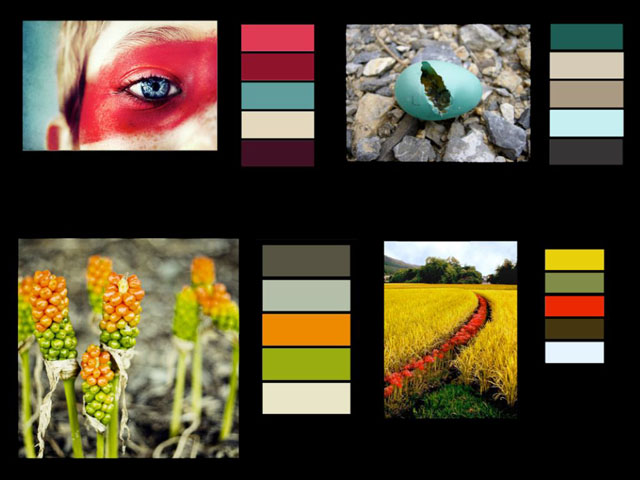

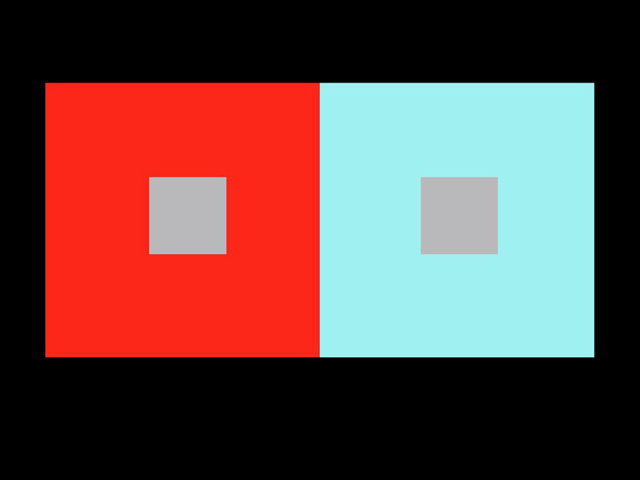

My wavering went on all week until the final day, when we were each asked to make a personal action plan with actions for ourselves, our organization, our community, and the profession. This was when the lessons of the week came into focus for me. I decided that, for now, I want to focus my energies on a different kind of leadership. Namely, I want to lead by developing my tech and design skills to the point where I can speak with authority on matters of technology and DH website creation, and (hopefully) lose some of the imposter syndrome I constantly carry with me. At that point I will reassess whether I want a more formal leadership position.

To that end, I made the following goals, which I am putting here in the hope that doing so will make me follow through. The timeline for all this is a year, at which point I will reassess.

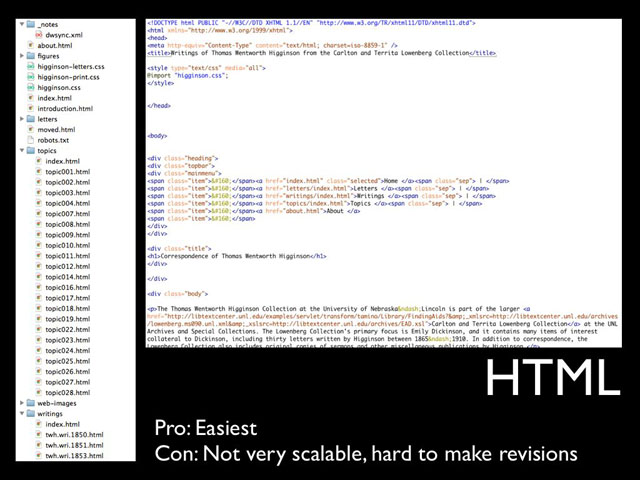

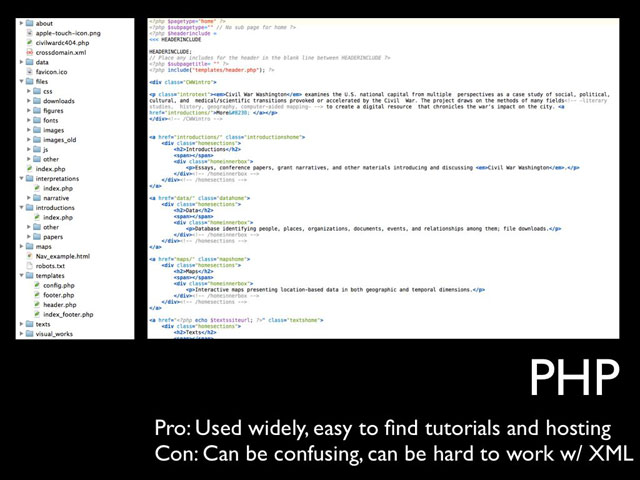

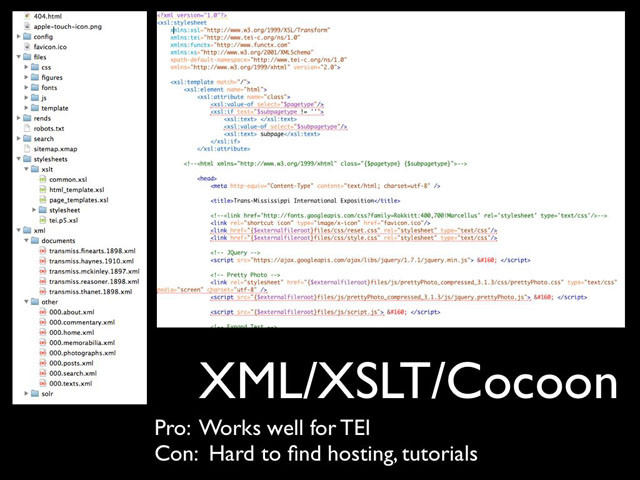

For myself: I will increase my technological knowledge, in particular knowledge about which technology to use for which purpose. Actions that will move me towards this goal: 1) redo one of our sites in another technology (in other words, learn another programming language); 2) meet with others to talk about code; 3) start keeping code on GitHub. In addition, I will start blogging here more often. This has always been a problem for me: I hate blogging about tech because I feel like everything I’d have to say is so beginner-level and obvious.

One of my colleagues has proposed starting a “Women who Code” group in Lincoln, so I think that will go a long way towards action 2.

I need to do a bit more thinking about what my goals for “my organization, profession, and community” will be. Is my organization my library, or the center I work in? What kinds of professional activities should I be involved in? Which professional organizations should I immerse myself in? I currently have a list of six I have been in or am interested in, but I think I need to focus on one or two if I am to accomplish anything. What professional development opportunities should I pursue?

Over the next few months I will develop a fuller learning/action plan for myself, but for now it’s nice to have something of a plan. I’ve been feeling a bit lost professionally lately, and the NLLI gave me the kick I needed to get on track.